Machine Learning for Trading¶

Ultimately, the goal of active investment management is to achieve alpha, that is, returns in excess of the benchmark used for evaluation. The fundamental law of active management uses the information ratio (IR) to express the value of active management as the ratio of portfolio returns above the returns of a benchmark, usually an index, to the volatility of those returns. It approximates the information ratio as the product of the information coefficient (IC), which measures the quality of forecasts as their correlation with outcomes, and the breadth of a strategy, expressed as the square root of the number of bets.

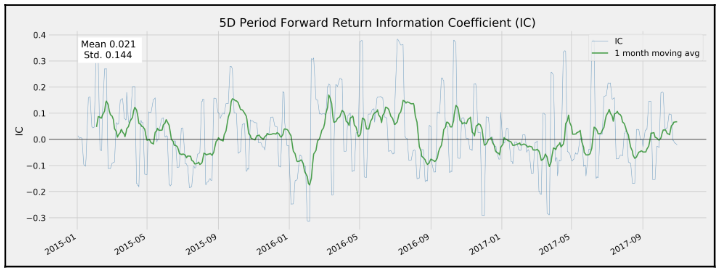

This time series plot shows extended periods with significantly positive moving-average IC. An IC of 0.05 or even 0.1 allows for significant outperformance if there are sufficient opportunities to apply this forecasting skill, as the fundamental law of active management implies.
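The relationship described above, IR ≈ IC × √breadth, can be made concrete with a little arithmetic. The following is a minimal sketch; the function name `information_ratio` and the breadth values are illustrative assumptions, not figures from the text.

```python
import math


def information_ratio(ic: float, breadth: float) -> float:
    """Approximate IR under the fundamental law: IR ~ IC * sqrt(breadth)."""
    if breadth < 0:
        raise ValueError("breadth must be non-negative")
    return ic * math.sqrt(breadth)


# Hypothetical breadths: the same modest skill (IC = 0.05) applied to
# a few versus many independent bets per year.
for breadth in (12, 250, 1000):
    print(breadth, round(information_ratio(0.05, breadth), 2))
```

Under these assumptions, a small IC only translates into a meaningful IR when the number of independent bets is large, which is why breadth matters as much as forecast quality.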

Hence, the key to generating alpha is forecasting. Successful predictions, in turn, require superior information or a superior ability to process public information. Algorithms facilitate optimization throughout the investment process, from asset allocation to idea generation, trade execution, and risk management. The use of ML for algorithmic trading, in particular, aims for more efficient use of conventional and alternative data, with the goal of producing both better and more actionable forecasts, hence improving the value of active management.

The return provided by an asset is a function of the uncertainty or risk associated with the financial investment. An equity investment implies, for example, assuming a company's business risk, and a bond investment implies assuming default risk.

If specific risk characteristics predict returns, then identifying and forecasting the behavior of these risk factors becomes a primary focus when designing an investment strategy. Doing so yields valuable trading signals and is the key to superior active-management results.

Use Cases of ML for Trading¶

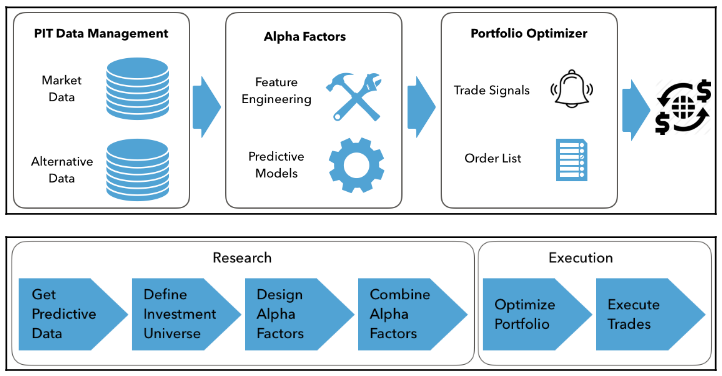

ML extracts signals from a wide range of market, fundamental, and alternative data, and can be applied at all steps of the algorithmic trading-strategy process. Key applications include:

- Data mining to identify patterns and extract features

- Supervised learning to generate risk or alpha factors and generate entry/exit conditions

- Aggregation of individual signals into a strategy

- Allocation of assets according to risk profiles learned by an algorithm

- The testing and evaluation of strategies, including through the use of synthetic data

- The interactive, automated refinement of a strategy using reinforcement learning

Alpha factor research and evaluation¶

Alpha factors are designed to extract signals from data to predict asset returns for a given investment universe over the trading horizon. A factor takes on a single value for each asset when evaluated, but may combine one or several input variables.

Alpha factors emit entry and exit signals that lead to buy or sell orders, and order execution results in portfolio holdings. The risk profiles of individual positions interact to create a specific portfolio risk profile.
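One common way to evaluate such a factor is the information coefficient introduced earlier, computed per date as the rank correlation between factor values and subsequent returns. The sketch below assumes two (dates × assets) frames without missing values; the function name `information_coefficient` and the synthetic data are hypothetical.

```python
import numpy as np
import pandas as pd


def information_coefficient(factor: pd.DataFrame, forward_returns: pd.DataFrame) -> pd.Series:
    """Per-date Spearman rank correlation between factor values and forward returns."""
    factor, forward_returns = factor.align(forward_returns, join="inner")
    # Rank across assets within each date, then correlate the ranks row by row
    return factor.rank(axis=1).corrwith(forward_returns.rank(axis=1), axis=1)


# Synthetic example: forward returns contain a weak factor signal plus noise
rng = np.random.default_rng(42)
dates = pd.date_range("2020-01-01", periods=250, freq="B")
assets = [f"A{i:03d}" for i in range(100)]
factor = pd.DataFrame(rng.standard_normal((250, 100)), index=dates, columns=assets)
noise = pd.DataFrame(rng.standard_normal((250, 100)), index=dates, columns=assets)
forward_returns = 0.05 * factor + noise

ic = information_coefficient(factor, forward_returns)
print(ic.mean())
print(ic.rolling(21).mean().dropna().tail())
```

The rolling mean in the last line corresponds to the kind of moving-average IC series discussed at the start of this page.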

Engineering alpha factors¶

Alpha factors are transformations of market, fundamental, and alternative data that contain predictive signals. They are designed to capture risks that drive asset returns. One set of factors describes fundamental, economy-wide variables such as growth, inflation, volatility, productivity, and demographic risk. Another set consists of tradeable investment styles such as the market portfolio, value-growth investing, and momentum investing.

There are also factors that explain price movements based on the economics or institutional setting of financial markets, or investor behavior, including known biases of this behavior. The economic theory behind factors can be rational, where the factors have high returns over the long run to compensate for their low returns during bad times, or behavioral, where factor risk premiums result from the possibly biased, or not entirely rational behavior of agents that is not arbitraged away.

Important factor categories¶

In an idealized world, categories of risk factors should be independent of each other (orthogonal), yield positive risk premia, and form a complete set that spans all dimensions of risk and explains the systematic risks for assets in a given class. In practice, these requirements will hold only approximately.

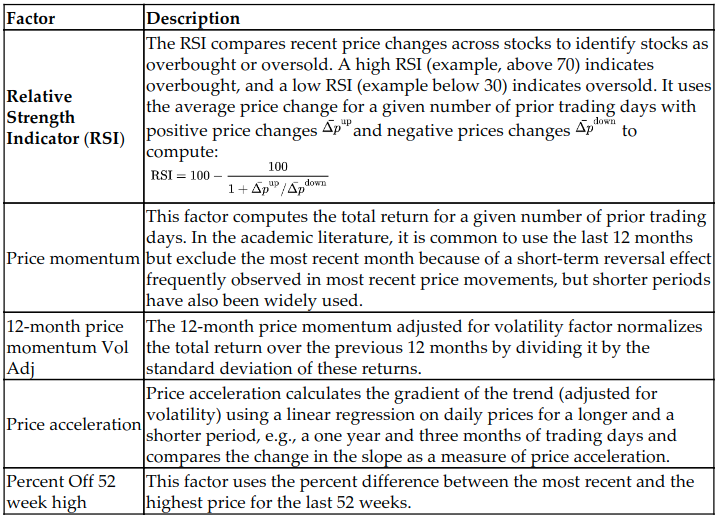

Momentum and sentiment factors¶

Momentum investing follows the adages "the trend is your friend" and "let your winners run". Momentum risk factors are designed to go long assets that have performed well while going short assets with poor performance over a certain period. Such price momentum would defy the efficient market hypothesis, which states that past price returns alone cannot predict future performance.

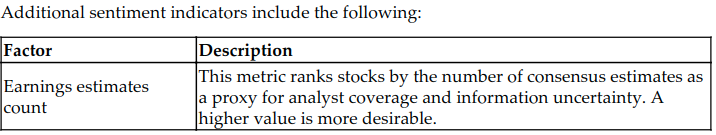

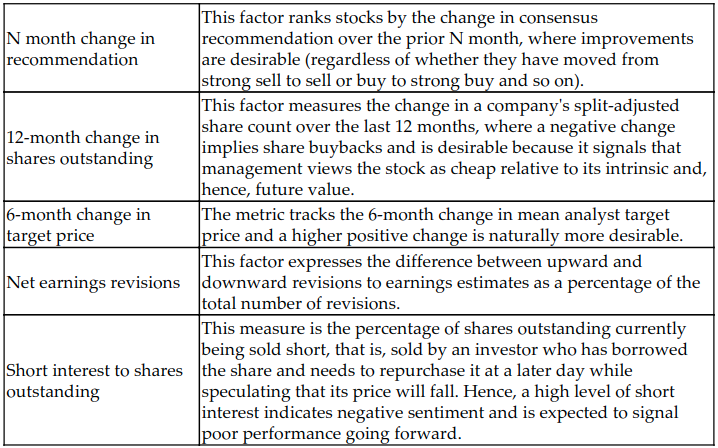

Key metrics:

- Momentum factors are typically derived from changes in price time series by identifying trends and patterns. They can be constructed based on absolute or relative return, by comparing a cross-section of assets or analyzing an asset's time series, within or across traditional asset classes, and at different time horizons. A few popular illustrative indicators are listed in the following table:
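As an illustration of a time-series momentum measure and its cross-sectional ranking, the sketch below computes a 12-1 month return (the return from 12 months ago to one month ago, skipping the most recent month). This is one common convention chosen here as an assumption; the function names and the synthetic monthly prices are hypothetical.

```python
import numpy as np
import pandas as pd


def momentum_12_1(monthly_prices: pd.DataFrame) -> pd.DataFrame:
    """Return from 12 months ago to 1 month ago, skipping the most recent month."""
    return monthly_prices.shift(1) / monthly_prices.shift(12) - 1


def cross_sectional_rank(factor: pd.DataFrame) -> pd.DataFrame:
    """Percentile rank of each asset within each date (relative momentum)."""
    return factor.rank(axis=1, pct=True)


# Synthetic monthly prices following a geometric random walk
rng = np.random.default_rng(1)
dates = pd.date_range("2015-01-01", periods=36, freq="MS")
prices = pd.DataFrame(
    100 * np.exp(np.cumsum(rng.normal(0.005, 0.05, size=(36, 5)), axis=0)),
    index=dates,
    columns=list("ABCDE"),
)

momentum = momentum_12_1(prices)       # absolute (time-series) momentum
ranks = cross_sectional_rank(momentum)  # relative (cross-sectional) momentum
print(ranks.tail(3))
```

The first 12 rows are missing by construction because the lookback window is not yet available.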

Value factors¶

Stocks with low prices relative to their fundamental value tend to deliver returns in excess of a capitalization-weighted benchmark. Value factors reflect this correlation and are designed to provide signals to buy undervalued assets, that is, those that are relatively cheap, and sell those that are overvalued and expensive. For this reason, at the core of any value strategy is a valuation model that estimates or proxies the asset's fair or fundamental value. Fair value can be defined as an absolute price level, a spread relative to other assets, or a range in which an asset should trade (for example, three standard deviations).

Value strategies rely on mean-reversion of prices to the asset's fair value. They assume that prices only temporarily move away from fair value due to either behavioral effects, such as overreaction or herding, or liquidity effects, such as temporary market impact or long-term supply/demand frictions. Since value factors rely on mean-reversion, they often exhibit properties opposite to those of momentum factors. For equities, the opposite of value stocks is growth stocks, which have a high valuation due to growth expectations.

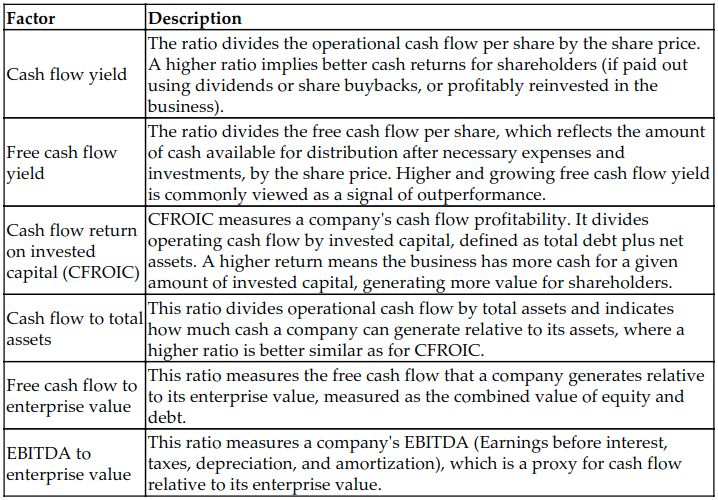

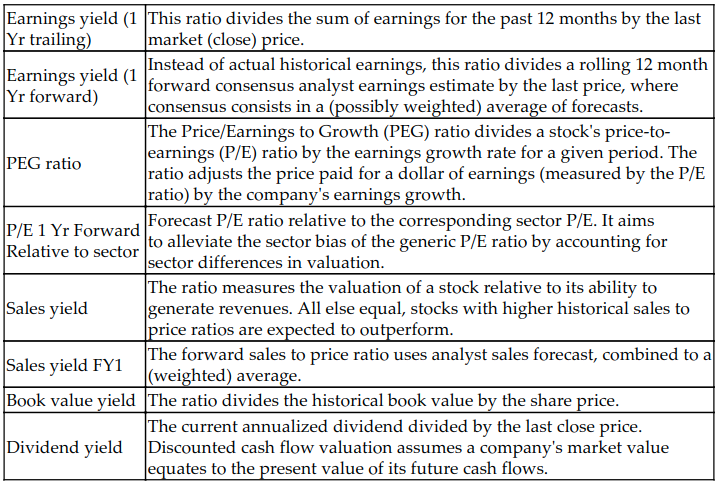

Key metrics

- There is a large number of valuation proxies computed from fundamental data. These factors can be combined as inputs into a machine learning valuation model to predict prices.
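To illustrate what such valuation proxies can look like, the sketch below computes an earnings yield and a book-to-market ratio and averages their cross-sectional ranks into a naive composite. The tickers, figures, and column names are hypothetical, and the equal-weighted rank average is only a stand-in for the machine learning model mentioned above.

```python
import pandas as pd

# Hypothetical per-share fundamentals for three made-up tickers
fundamentals = pd.DataFrame(
    {
        "price": [50.0, 20.0, 120.0],
        "eps": [5.0, 1.0, 3.0],
        "book_value_per_share": [25.0, 30.0, 20.0],
    },
    index=["AAA", "BBB", "CCC"],
)

value = pd.DataFrame(
    {
        "earnings_yield": fundamentals["eps"] / fundamentals["price"],
        "book_to_market": fundamentals["book_value_per_share"] / fundamentals["price"],
    }
)

# Naive composite: average percentile rank across proxies (higher = cheaper)
value["composite"] = value.rank(pct=True).mean(axis=1)
print(value)
```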

Volatility and size factors¶

The low volatility factor captures excess returns on stocks with below-average volatility, beta, or idiosyncratic risk. Stocks with a larger market capitalization tend to have lower volatility, so the traditional size factor is often combined with the more recent volatility factor.

The low volatility anomaly is an empirical puzzle that is at odds with basic principles of finance. The Capital Asset Pricing Model (CAPM) and other asset pricing models assert that higher risk should earn higher returns, but in numerous markets and over extended periods, the opposite has been true with less risky assets outperforming their riskier peers.

Key metrics

- Metrics used to identify low volatility stocks cover a broad spectrum, with realized volatility (standard deviation) on one end, and forecast (implied) volatility and correlations on the other end. Some operationalize low volatility as low beta.
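Two of the metrics named above, realized volatility and beta, can be sketched with pandas as follows. The window length, the annualization factor of 252 periods, and the synthetic returns are assumptions made for illustration.

```python
import numpy as np
import pandas as pd


def realized_volatility(returns: pd.DataFrame, window: int = 63,
                        periods_per_year: int = 252) -> pd.DataFrame:
    """Annualized rolling standard deviation of periodic returns."""
    return returns.rolling(window).std() * np.sqrt(periods_per_year)


def market_beta(returns: pd.DataFrame, market: pd.Series) -> pd.Series:
    """Full-sample beta per asset: cov(asset, market) / var(market)."""
    return returns.apply(lambda r: r.cov(market)) / market.var()


# Synthetic daily returns with known market sensitivities of 0.5 and 1.5
rng = np.random.default_rng(7)
dates = pd.date_range("2020-01-01", periods=1000, freq="B")
market = pd.Series(rng.normal(0, 0.01, 1000), index=dates)
returns = pd.DataFrame(
    {
        "low_beta": 0.5 * market + rng.normal(0, 0.005, 1000),
        "high_beta": 1.5 * market + rng.normal(0, 0.005, 1000),
    }
)

vol = realized_volatility(returns)
beta = market_beta(returns, market)
print(vol.iloc[-1])
print(beta)
```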

Quality factors¶

The quality factor aims to capture the excess return on companies that are highly profitable, operationally efficient, safe, stable, and well-governed (in short, high quality) versus the market. The markets also appear to reward relative earnings certainty and penalize stocks with high earnings volatility. A portfolio tilt towards businesses with high quality has long been advocated by stock pickers who rely on fundamental analysis but is a relatively new phenomenon in quantitative investments. The main challenge is how to define the quality factor consistently and objectively using quantitative indicators, given the subjective nature of quality.

Strategies based on standalone quality factors tend to perform in a counter-cyclical way as investors pay a premium to minimize downside risks and drive up valuations. For this reason, quality factors are often combined with other risk factors in a multi-factor strategy, most frequently with value to produce the quality at a reasonable price strategy. Long-short quality factors tend to have negative market beta because they are long quality stocks that are also low volatility, and short more volatile, low-quality stocks. Hence, quality factors are often positively correlated with low volatility and momentum factors, and negatively correlated with value and broad market exposure.

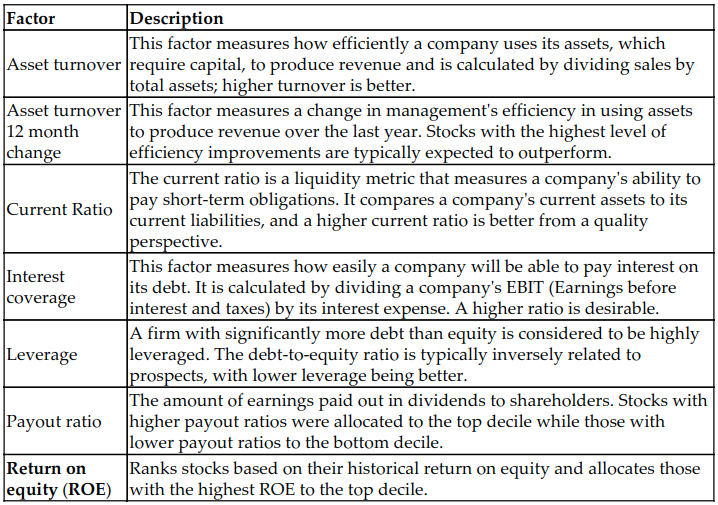

Key metrics

Quality factors rely on metrics computed from the balance sheet and income statement that indicate profitability reflected in high profit or cash flow margins, operating efficiency, financial strength, and competitiveness more broadly because it implies the ability to sustain a profitable position over time.

Hence, quality has been measured using gross profitability, return on invested capital, low earnings volatility, or a combination of various profitability, earnings quality, and leverage metrics, with some options listed in the following table.

Earnings management is mainly exercised by manipulating accruals. Hence, the size of accruals is often used as a proxy for earnings quality: higher total accruals relative to assets make low earnings quality more likely. However, this is not unambiguous, as accruals can reflect earnings manipulation just as well as accounting estimates of future business growth.
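A minimal sketch of the accruals proxy described above follows. It assumes the cash-flow-statement definition of total accruals (net income minus operating cash flow) scaled by total assets; other definitions exist, and the two example companies and their figures are hypothetical.

```python
import pandas as pd


def accruals_ratio(financials: pd.DataFrame) -> pd.Series:
    """Total accruals (net income - operating cash flow) relative to total assets."""
    accruals = financials["net_income"] - financials["operating_cash_flow"]
    return accruals / financials["total_assets"]


# Two hypothetical firms with identical earnings but different cash backing
financials = pd.DataFrame(
    {
        "net_income": [100.0, 100.0],
        "operating_cash_flow": [120.0, 40.0],
        "total_assets": [1000.0, 1000.0],
    },
    index=["cash_backed", "accrual_heavy"],
)

ratio = accruals_ratio(financials)
print(ratio.sort_values())
```

Under this proxy, the firm whose earnings exceed its operating cash flow receives the higher ratio and would be flagged as the more likely candidate for low earnings quality, subject to the ambiguity noted above.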

Sourcing and managing data¶

The dramatic evolution of data in terms of volume, variety, and velocity is both a necessary condition and a driving force of the application of ML to algorithmic trading. The proliferating supply of data requires active management to uncover potential value, including the following steps:

- Identify and evaluate market, fundamental, and alternative data sources containing alpha signals that do not decay too quickly.

- Deploy or access cloud-based scalable data infrastructure and analytical tools like Hadoop or Spark to facilitate fast, flexible data access.

- Carefully manage and curate data to avoid look-ahead bias by adjusting it to the desired frequency on a point-in-time (PIT) basis. This means that data may only reflect information available and known at the given time.
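The point-in-time requirement in the last step can be sketched with `pandas.merge_asof`, which attaches to each observation the most recent record whose key is not later than the observation date. The example below assumes fundamentals carry a `release_date` column distinct from the fiscal `period_end`; all tickers, dates, and values are hypothetical.

```python
import pandas as pd

# Hypothetical quarterly fundamentals: the fiscal period end differs
# from the date the figures became publicly known.
fundamentals = pd.DataFrame(
    {
        "ticker": ["AAA", "AAA"],
        "period_end": pd.to_datetime(["2019-12-31", "2020-03-31"]),
        "release_date": pd.to_datetime(["2020-02-15", "2020-05-10"]),
        "eps": [1.00, 1.20],
    }
)

prices = pd.DataFrame(
    {
        "ticker": ["AAA"] * 4,
        "date": pd.to_datetime(["2020-01-31", "2020-02-28", "2020-04-30", "2020-05-29"]),
        "close": [50.0, 52.0, 48.0, 55.0],
    }
)

# Join on the release date, not the period end, so each price row only
# sees fundamentals that had already been published on that date.
pit = pd.merge_asof(
    prices.sort_values("date"),
    fundamentals.sort_values("release_date"),
    left_on="date",
    right_on="release_date",
    by="ticker",
    direction="backward",
)
print(pit[["date", "close", "period_end", "eps"]])
```

Joining on `period_end` instead would expose the first-quarter figure on 2020-04-30, more than a week before its assumed release, which is exactly the look-ahead bias to avoid.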

Efficient data storage with pandas¶

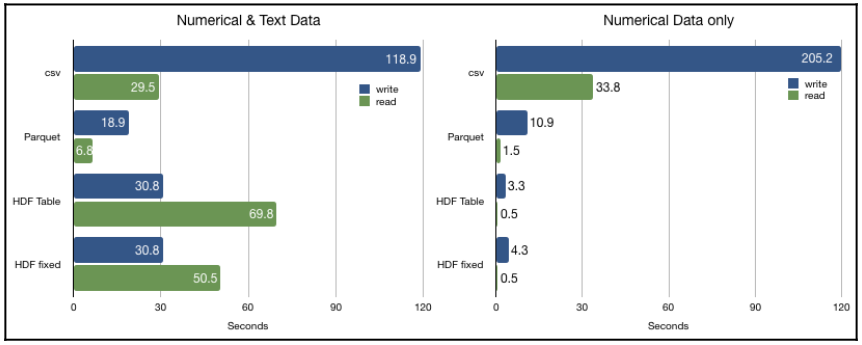

This section compares the main storage formats for efficiency and performance. In particular, we compare the following:

- CSV: Comma-separated, standard flat text file format

- HDF5: Hierarchical data format, developed initially at the National Center for Supercomputing Applications, is a fast and scalable storage format for numerical data, available in pandas using the PyTables library.

- Parquet: A binary, columnar storage format, part of the Apache Hadoop ecosystem, that provides efficient data compression and encoding and has been developed by Cloudera and Twitter. It is available for pandas through the pyarrow library.

The following charts illustrate the read and write performance for 100,000 rows with either 1,000 columns of random floats and 1,000 columns of a random 10-character string, or just 2,000 float columns:

- For purely numerical data, the HDF5 format performs best, and the table format also shares with CSV the smallest memory footprint at 1.6 GB. The fixed format uses twice as much space, and the parquet format uses 2 GB.

- For a mix of numerical and text data, parquet is significantly faster, and HDF5 retains its read advantage over CSV (which has very low write performance in both cases).
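A comparison along these lines can be sketched as follows. This is not the benchmark behind the figures above: it uses a much smaller, purely numerical frame, times a single write and read per format, and skips any format whose optional dependency (PyTables or pyarrow) is not installed. The function name `benchmark` is hypothetical.

```python
import os
import tempfile
import time

import numpy as np
import pandas as pd


def benchmark(df: pd.DataFrame) -> pd.DataFrame:
    """Time one write and one read per format and record the on-disk size."""
    formats = {
        "csv": (lambda p: df.to_csv(p), lambda p: pd.read_csv(p, index_col=0)),
        "hdf_fixed": (lambda p: df.to_hdf(p, key="df", format="fixed"),
                      lambda p: pd.read_hdf(p, key="df")),
        "hdf_table": (lambda p: df.to_hdf(p, key="df", format="table"),
                      lambda p: pd.read_hdf(p, key="df")),
        "parquet": (lambda p: df.to_parquet(p), lambda p: pd.read_parquet(p)),
    }
    results = {}
    with tempfile.TemporaryDirectory() as tmp:
        for name, (write, read) in formats.items():
            path = os.path.join(tmp, f"data.{name}")
            try:
                start = time.perf_counter()
                write(path)
                write_s = time.perf_counter() - start
                start = time.perf_counter()
                read(path)
                read_s = time.perf_counter() - start
            except ImportError:
                continue  # PyTables or pyarrow is not installed; skip this format
            results[name] = {
                "write_s": write_s,
                "read_s": read_s,
                "size_mb": os.path.getsize(path) / 1e6,
            }
    return pd.DataFrame.from_dict(results, orient="index")


rng = np.random.default_rng(0)
df = pd.DataFrame(rng.random((10_000, 20)), columns=[f"c{i}" for i in range(20)])
results = benchmark(df)
print(results)
```

Timings from a single run on a small frame are noisy, so results from this sketch should be read as indicative only; repeating each measurement and scaling up the frame would be needed for a fair comparison.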

The alternative data revolution¶

The data deluge driven by digitization, networking, and plummeting storage costs has led to profound qualitative changes in the nature of information available for predictive analytics, often summarized by the five Vs:

- Volume: The amount of data generated, collected, and stored is orders of magnitude larger as the byproduct of online and offline activity, transactions, records, and other sources, and volumes continue to grow with the capacity for analysis and storage.

- Velocity: Data is generated, transferred, and processed to become available near, or at, real-time speed.

- Variety: Data is organized in formats no longer limited to structured, tabular forms, such as CSV files or relational database tables. Instead, new sources produce semi-structured formats, such as JSON or HTML, and unstructured content, including raw text, image, and audio or video data, adding new challenges to render data suitable for ML algorithms.

- Veracity: The diversity of sources and formats makes it much more difficult to validate the reliability of the data's information content.

- Value: Determining the value of new datasets can be much more time- and resource-consuming, as well as more uncertain than before.
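As a small illustration of the variety challenge, semi-structured JSON records can be flattened into a tabular form with `pandas.json_normalize`. The records below are hypothetical, and real sources typically need considerably more cleaning before they are suitable for ML algorithms.

```python
import pandas as pd

# Hypothetical semi-structured records with nested and missing fields
records = [
    {"id": 1, "user": {"name": "a", "followers": 120}, "text": "Great quarter for $AAA"},
    {"id": 2, "user": {"name": "b"}, "text": "Store was empty today"},
]

# Nested keys become columns such as user_name; absent fields become NaN
flat = pd.json_normalize(records, sep="_")
print(flat)
```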

Today, investors can access macro or company-specific data in real-time that historically has been available only at a much lower frequency. Use cases for new data sources include the following:

- Online price data on a representative set of goods and services can be used to measure inflation.

- The number of store visits or purchases permits real-time estimates of company or industry-specific sales or economic activity.

- Satellite images can reveal agricultural yields, or activity at mines or on oil rigs before this information is available elsewhere.

ML allows for complex insights. In the past, quantitative approaches relied on simple heuristics to rank companies using historical data for metrics such as the price-to-book ratio, whereas ML algorithms synthesize new metrics, and learn and adapt such rules taking into account evolving market data. These insights create new opportunities to capture classic investment themes such as value, momentum, quality, or sentiment:

- Momentum: ML can identify asset exposures to market price movements, industry sentiment, or economic factors.

- Value: Algorithms can analyze large amounts of economic and industry-specific structured and unstructured data, beyond financial statements, to predict the intrinsic value of a company.

- Quality: The sophisticated analysis of integrated data allows for the evaluation of customer or employee reviews, e-commerce, or app traffic to identify gains in market share or other underlying earnings quality drivers.

Sources of alternative data¶

Alternative data sets are generated by many sources but can be classified at a high level as predominantly produced by:

- Individuals who post on social media, review products, or use search engines.

- Businesses that record commercial transactions, in particular, credit card payments, or capture supply-chain activity as intermediaries.

- Sensors that, among many other things, capture economic activity through images such as satellites or security cameras, or through movement patterns such as cell phone towers.

The market for alternative data¶

The investment industry is going to spend an estimated $3 billion to $4 billion on data services in 2020, and this number is expected to grow at double digits per year, in line with other industries. This expenditure includes the acquisition of alternative data, investments in related technology, and the hiring of qualified talent.

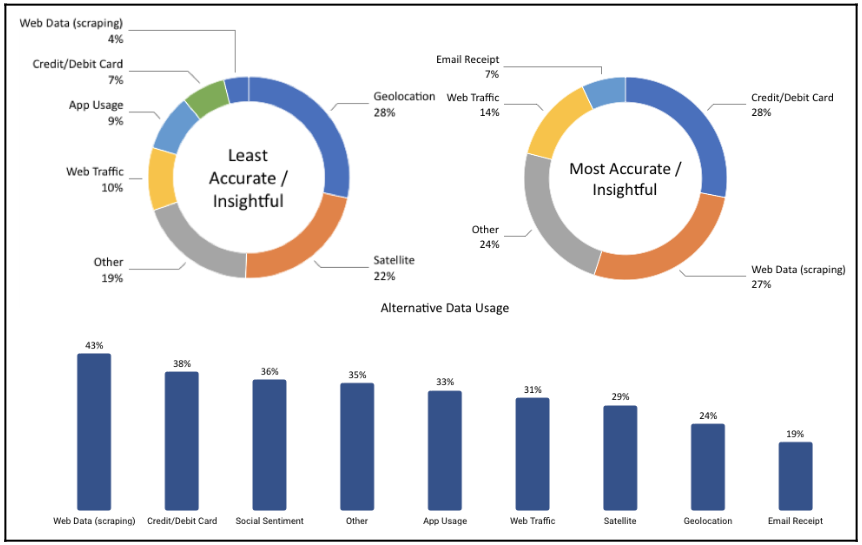

A survey by Ernst and Young shows significant adoption of alternative data in 2017; 43% of funds are using scraped web data, for instance, and almost 30% are experimenting with satellite data. Based on the experience so far, fund managers considered scraped web data and credit card data to be most insightful, in contrast to geolocation and satellite data, which around 25% considered to be less informative: