What Is Information Gain?

Information Gain, or IG for short, measures the reduction in entropy (surprise) achieved by splitting a dataset according to a given value of a random variable. A larger information gain means the resulting group or groups of samples have lower entropy, and hence less surprise.

Entropy quantifies how much information there is in a random variable, or more specifically in its probability distribution. A skewed distribution has low entropy, whereas a distribution in which all events are equally probable has the highest entropy.

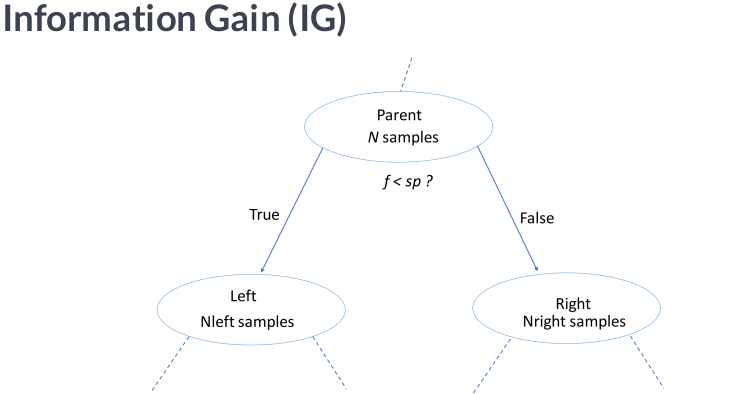

Information gain is commonly used to construct decision trees from a training dataset. At each node, the information gain is evaluated for every variable, and the variable that maximizes the gain between the parent node and its child nodes is selected. This choice minimizes the weighted entropy of the resulting groups and so gives the split that best separates the classes.

- In a binary case:

  - Entropy is 0 if all samples at a node belong to the same class (i.e., the node is pure).

  - Entropy is 1 if the samples at a node are split evenly between both classes (i.e., 50% for each class, the worst case for purity).

Information quantifies how surprising an event is, measured in bits. Lower-probability events carry more information; higher-probability events carry less.

Skewed Probability Distribution (unsurprising): Low entropy.

Balanced Probability Distribution (surprising): High entropy.
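As a minimal sketch of the idea above, the information of a single event can be computed as the negative base-2 logarithm of its probability. The helper name `information` is hypothetical and not part of the original notebook.

```python
from math import log2

def information(p):
    """Information (surprise) in bits of an event with probability p, 0 < p <= 1."""
    return -log2(p)

# rarer events carry more information than common ones
for p in (0.5, 0.1, 0.9):
    print(f'p(x)={p:.1f}, information: {information(p):.3f} bits')
```

Entropy is then the average of this quantity over all events, weighted by their probabilities.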

Information gain can also be used for feature selection, by evaluating the gain of each variable in the context of the target variable. In this slightly different usage, the calculation is referred to as mutual information between the two random variables.

Entropy example

In a binary classification problem, we can calculate the entropy of the data sample as follows:

- Entropy = -(p(0) * log2(p(0)) + p(1) * log2(p(1)))

Here p(0) and p(1) are the proportions of samples in class 0 and class 1, and the base-2 logarithm gives the result in bits.

A dataset with a 50/50 split of samples for the two classes would have the maximum entropy (maximum surprise) of 1 bit, whereas an imbalanced dataset with a split of 10/90 would have a smaller entropy (about 0.469 bits), as there would be less surprise for a randomly drawn example from the dataset.

# calculate the entropy for a dataset

from math import log2

# proportion of examples in each class

class0 = 10/100

class1 = 90/100

# calculate entropy

entropy = -(class0 * log2(class0) + class1 * log2(class1))

# print the result

print(f'Entropy: {round(entropy,3)} bits')

Entropy can be used as a measure of the purity of a dataset, i.e., how balanced the distribution of classes happens to be.

Information gain provides a way to use entropy to calculate how a change to the dataset impacts its purity, i.e., the distribution of classes. A smaller entropy suggests more purity or less surprise.

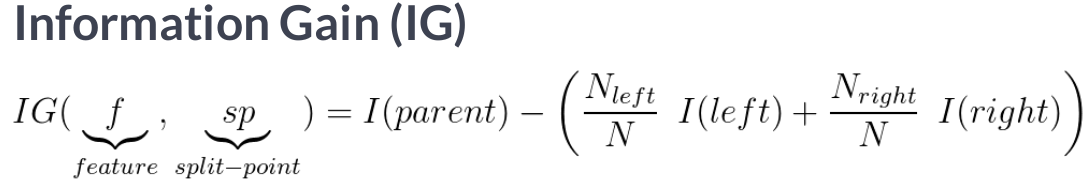

Calculating Information Gain

We can define a function to calculate the entropy of a group of samples based on the ratio of samples that belong to class 0 and class 1.

# calculate the entropy for the split in the dataset

def entropy(class0, class1):

    # class0 and class1 are class proportions; both must be greater than 0

    return -(class0 * log2(class0) + class1 * log2(class1))
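Note that the function above raises a `ValueError` for a pure group, because `log2(0)` is undefined. The sketch below is one possible way around that, using the usual convention that a zero-probability term contributes nothing to the sum. The name `safe_entropy` is hypothetical and not part of the original notebook; it also accepts more than two classes.

```python
from math import log2

def safe_entropy(*proportions):
    """Entropy in bits of a discrete distribution.

    Terms with zero probability are skipped (convention: 0 * log2(0) = 0).
    """
    return sum(p * log2(1 / p) for p in proportions if p > 0)

print('Pure group: %.3f bits' % safe_entropy(1.0, 0.0))
print('Balanced group: %.3f bits' % safe_entropy(0.5, 0.5))
print('13/7 split: %.3f bits' % safe_entropy(13 / 20, 7 / 20))
```

For proportions strictly between 0 and 1 this gives the same values as `entropy`, so the rest of the walkthrough is unaffected.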

Now, consider a dataset with 20 examples, 13 for class 0 and 7 for class 1. We can calculate the entropy for this dataset, which will be less than 1 bit because the classes are not perfectly balanced.

# split of the main dataset

class0 = 13 / 20

class1 = 7 / 20

# calculate entropy before the change

s_entropy = entropy(class0, class1)

print('Dataset Entropy: %.3f bits' % s_entropy)

Now consider that one of the variables in the dataset has 2 unique values, say value1 and value2. We are interested in calculating the information gain of this variable.

Let’s assume that if we split the dataset by value1, we have a group of 8 samples, 7 for class 0 and 1 for class 1. We can then calculate the entropy of this group of samples.

# split 1 (split via value1)

s1_class0 = 7 / 8

s1_class1 = 1 / 8

# calculate the entropy of the first group

s1_entropy = entropy(s1_class0, s1_class1)

print('Group1 Entropy: %.3f bits' % s1_entropy)

Now, let’s assume that we split the dataset by value2; we have a group of 12 samples with 6 in each class. We would expect this group to have an entropy of 1 bit.

# split 2 (split via value2)

s2_class0 = 6 / 12

s2_class1 = 6 / 12

# calculate the entropy of the second group

s2_entropy = entropy(s2_class0, s2_class1)

print('Group2 Entropy: %.3f bits' % s2_entropy)

Lastly, we can calculate the information gain for this variable based on the groups created for each value of the variable and the calculated entropy.

The first value (value1) resulted in a group of 8 examples from the dataset, and the second value (value2) gave a group with the remaining 12 samples. Therefore, we have everything we need to calculate the information gain.

In this case, information gain can be calculated as:

Entropy(Dataset) - (Count(Group1) / Count(Dataset) * Entropy(Group1) + Count(Group2) / Count(Dataset) * Entropy(Group2))

Or:

Entropy(13/20, 7/20) - (8/20 * Entropy(7/8, 1/8) + 12/20 * Entropy(6/12, 6/12))

# calculate the information gain

gain = s_entropy - (8/20 * s1_entropy + 12/20 * s2_entropy)

print('Information Gain: %.3f bits' % gain)
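The steps above can be generalized into a single function. The following is a minimal sketch, assuming the feature and the class labels are given as two equal-length lists; the names `label_entropy` and `information_gain` are hypothetical and not part of the original notebook.

```python
from collections import Counter
from math import log2

def label_entropy(labels):
    """Entropy in bits of the class distribution in a list of labels."""
    total = len(labels)
    return sum((n / total) * log2(total / n) for n in Counter(labels).values())

def information_gain(feature_values, labels):
    """Parent entropy minus the size-weighted entropy of each group."""
    total = len(labels)
    # group the class labels by the value of the feature
    groups = {}
    for value, label in zip(feature_values, labels):
        groups.setdefault(value, []).append(label)
    weighted = sum(len(g) / total * label_entropy(g) for g in groups.values())
    return label_entropy(labels) - weighted

# Rebuild the 20-sample example: value1 -> 7 of class 0 and 1 of class 1,
# value2 -> 6 of each class.
feature = ['value1'] * 8 + ['value2'] * 12
labels = [0] * 7 + [1] * 1 + [0] * 6 + [1] * 6

print('Information Gain: %.3f bits' % information_gain(feature, labels))
```

Because the groups are built from whatever values the feature takes, the same function works for variables with more than two unique values.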

Using information theory to evaluate features

The mutual information (MI) between a feature and the outcome is a measure of the mutual dependence between the two variables. It extends the notion of correlation to nonlinear relationships. More specifically, it quantifies the information obtained about one random variable through the other random variable. MI measures how different the joint distribution of the pair (X,Y) is from the product of the marginal distributions of X and Y.
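To make the definition concrete, here is a minimal sketch that computes MI for two discrete variables directly from the joint and marginal frequencies. It reuses the 20-sample split from the information gain walkthrough earlier, and the function name `mutual_information` is hypothetical. The result should match the information gain computed there, since both quantities measure the same reduction in entropy.

```python
from collections import Counter
from math import log2

def mutual_information(xs, ys):
    """MI in bits: divergence of the joint distribution from the product of marginals."""
    n = len(xs)
    joint = Counter(zip(xs, ys))   # counts of each (x, y) pair
    px = Counter(xs)               # marginal counts of x
    py = Counter(ys)               # marginal counts of y
    return sum(
        (c / n) * log2((c / n) / ((px[x] / n) * (py[y] / n)))
        for (x, y), c in joint.items()
    )

# value1 -> 7 of class 0 and 1 of class 1, value2 -> 6 of each class
x = ['value1'] * 8 + ['value2'] * 12
y = [0] * 7 + [1] * 1 + [0] * 6 + [1] * 6

print('Mutual Information: %.3f bits' % mutual_information(x, y))
```

When the two variables are independent, the joint distribution equals the product of the marginals, every log term is zero, and the MI is 0.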

The sklearn function feature_selection.mutual_info_regression computes the mutual information between each feature and a continuous outcome, which helps select the features that are most likely to contain predictive information. There is also a classification version, mutual_info_classif, which is the one imported and used in the code that follows (see the documentation for more details).
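As a rough illustration of how MI captures nonlinear dependence, the sketch below applies `mutual_info_regression` to synthetic data. The data, variable names, and noise level are assumptions made for this example only and have nothing to do with the dataset used in the rest of the notebook.

```python
import numpy as np
from sklearn.feature_selection import mutual_info_regression

rng = np.random.default_rng(42)
n_samples = 1000

# one feature drives the outcome through a symmetric, nonlinear relationship
x_informative = rng.uniform(-1, 1, n_samples)
# the other feature is unrelated to the outcome
x_noise = rng.uniform(-1, 1, n_samples)
y = x_informative ** 2 + rng.normal(0, 0.05, n_samples)

X = np.column_stack([x_informative, x_noise])
mi = mutual_info_regression(X, y, random_state=42)

print('Pearson correlation with y:', np.corrcoef(x_informative, y)[0, 1])
print('Mutual information (informative, noise):', mi)
```

The linear correlation of the informative feature with the outcome should be close to zero here, while its estimated MI should be clearly larger than that of the noise feature. Note that scikit-learn estimates MI with a nearest-neighbor method and reports it in nats rather than bits.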

%matplotlib inline

import warnings

from datetime import datetime

import os

from pathlib import Path

import quandl

import numpy as np

import matplotlib.pyplot as plt

import seaborn as sns

import pandas as pd

import pandas_datareader.data as web

from pandas_datareader.famafrench import get_available_datasets

from pyfinance.ols import PandasRollingOLS

from sklearn.feature_selection import mutual_info_classif

warnings.filterwarnings('ignore')

sns.set(style="darkgrid", color_codes=True)

Get Data


with pd.HDFStore('/home/aj/Research Notebooks_py/Hands-On-Machine-Learning-for-Algorithmic-Trading/data/assets.h5', mode='r') as store:

    data = store['engineered_features']

data.head()

data.info()

data.isna().sum()

data = data.dropna()

data.info()

Create Dummy variables

dummy_data = pd.get_dummies(data,
                            columns=['year', 'month', 'msize', 'Sector'],
                            prefix=['year', 'month', 'msize', ''],
                            prefix_sep=['_', '_', '_', ''])

dummy_data = dummy_data.rename(columns={c:c.replace('.0', '') for c in dummy_data.columns})

dummy_data.info()

dummy_data.head()

dummy_data.describe()

Mutual Information

Original Data

target_labels = [f'target_{i}m' for i in [1,2,3,6,12]]

targets = data.dropna().loc[:, target_labels]

features = data.dropna().drop(target_labels, axis=1)

features.Sector = pd.factorize(features.Sector)[0]

cat_cols = ['year', 'month', 'msize', 'Sector']

discrete_features = [features.columns.get_loc(c) for c in cat_cols]

mutual_info = pd.DataFrame()

for label in target_labels:

    # binary target: 1 if the return over the horizon is positive, else 0

    mi = mutual_info_classif(X=features,
                             y=(targets[label] > 0).astype(int),
                             discrete_features=discrete_features,
                             random_state=42)

    mutual_info[label] = pd.Series(mi, index=features.columns)

mutual_info.sum()

Normalized MI Heatmap

fig, ax= plt.subplots(figsize=(15, 4))

sns.heatmap(mutual_info.div(mutual_info.sum()).T, ax=ax);

Dummy Data

target_labels = [f'target_{i}m' for i in [1, 2, 3, 6, 12]]

dummy_targets = dummy_data.dropna().loc[:, target_labels]

dummy_features = dummy_data.dropna().drop(target_labels, axis=1)

cat_cols = [c for c in dummy_features.columns if c not in features.columns]

discrete_features = [dummy_features.columns.get_loc(c) for c in cat_cols]

mutual_info_dummies = pd.DataFrame()

for label in target_labels:

    # binary target: 1 if the return over the horizon is positive, else 0

    mi = mutual_info_classif(X=dummy_features,
                             y=(dummy_targets[label] > 0).astype(int),
                             discrete_features=discrete_features,
                             random_state=42)

    mutual_info_dummies[label] = pd.Series(mi, index=dummy_features.columns)

mutual_info_dummies.sum()

fig, ax= plt.subplots(figsize=(4, 20))

sns.heatmap(mutual_info_dummies.div(mutual_info_dummies.sum()), ax=ax);