Dimensionality Reduction

- Dimensionality reduction produces new data that captures the most important information contained in the source data. Rather than grouping existing data into clusters, these algorithms transform existing data into a new dataset that uses significantly fewer features to represent the original information.

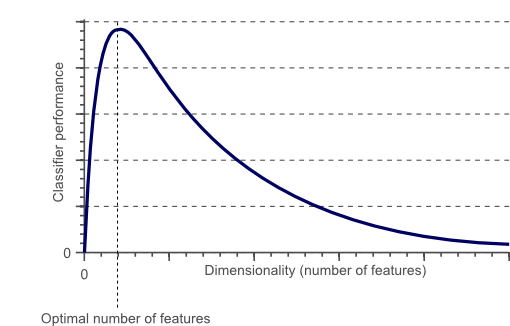

- As the number of features increases, the model becomes more complex, and having more features increases the likelihood of overfitting. A machine learning model trained on a large number of features becomes increasingly dependent on the training data and can overfit, resulting in poor performance on new data.

- Advantages of Feature Extraction (dimensionality reduction) techniques:

- Improve accuracy.

- Reduce overfitting.

- Faster training.

- Improve Data Visualization.

- Increase model explainability.

- Another commonly used technique to reduce the number of features in a dataset is Feature Selection. The difference is that Feature Extraction creates new features by combining the existing ones, whereas Feature Selection ranks the importance of the existing features in the dataset and discards the less important ones.
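To make the contrast concrete, here is a minimal NumPy sketch of feature *selection*. It keeps a subset of the original columns unchanged. Ranking columns by variance is only one assumed importance criterion, chosen for simplicity. The helper `select_top_variance` is hypothetical, and the data is random toy data.

```python
import numpy as np

def select_top_variance(X, k):
    """Feature selection: keep the k original columns with the largest variance."""
    # Rank columns by variance (one simple importance criterion among many)
    order = np.argsort(X.var(axis=0))[::-1]
    keep = np.sort(order[:k])          # preserve the original column order
    return X[:, keep], keep

rng = np.random.default_rng(0)
# Toy data: column 1 has far more spread than columns 2 and 0
X = rng.normal(size=(200, 3)) * np.array([0.1, 5.0, 1.0])
X_sel, kept = select_top_variance(X, k=2)
print(kept)   # indices of the surviving original columns
```

The selected columns are still the original, interpretable features. An extraction method such as PCA would instead return new columns, each a combination of all three.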

Unsupervised dimensionality reduction

- If your number of features is high, it may be useful to reduce it with an unsupervised algorithm before fitting a supervised algorithm. Many of scikit-learn's unsupervised learning methods implement a transform method that can reduce the dimensionality.
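The fit/transform pattern lets a reducer learn its parameters once and then apply them to any data. The sketch below shows that idea with a bare-bones PCA written in NumPy. `MinimalPCA` is a hypothetical illustration class, not scikit-learn's `PCA`. It is fitted on training rows only and then reused on held-out rows, which is the usual way to avoid leaking test information into the reduction step.

```python
import numpy as np

class MinimalPCA:
    """Bare-bones PCA with a scikit-learn-style fit/transform interface (illustrative only)."""

    def __init__(self, n_components):
        self.n_components = n_components

    def fit(self, X):
        # Learn the centring vector and the principal axes from the training data only
        self.mean_ = X.mean(axis=0)
        _, _, vt = np.linalg.svd(X - self.mean_, full_matrices=False)
        self.components_ = vt[: self.n_components]
        return self

    def transform(self, X):
        # Re-use the training mean and axes for any data (train or test)
        return (X - self.mean_) @ self.components_.T

rng = np.random.default_rng(1)
X = rng.normal(size=(100, 10))
X_train, X_test = X[:70], X[70:]

reducer = MinimalPCA(n_components=3).fit(X_train)
Z_train = reducer.transform(X_train)
Z_test = reducer.transform(X_test)
print(Z_train.shape, Z_test.shape)   # (70, 3) (30, 3)
```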

Walk through

- We use the Kaggle Mushroom Classification dataset as an example. Our objective is to predict whether a mushroom is poisonous or not, given its features.

Imports

In [1]:

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

from mpl_toolkits.mplot3d import Axes3D

import matplotlib.patches as mpatches

from pylab import rcParams

import seaborn as sns

from matplotlib.pyplot import figure

import plotly.graph_objs as go

from plotly.offline import init_notebook_mode, iplot

from sklearn.utils import shuffle

from sklearn import preprocessing

from sklearn.preprocessing import LabelEncoder

from cycler import cycler

import warnings

from sklearn.preprocessing import StandardScaler

from sklearn.model_selection import train_test_split

from sklearn.metrics import classification_report,confusion_matrix

from sklearn.ensemble import RandomForestClassifier

from yellowbrick.classifier import ConfusionMatrix

from yellowbrick.classifier import ROCAUC

from yellowbrick.classifier import PrecisionRecallCurve

from yellowbrick.classifier import ClassificationReport

warnings.filterwarnings('ignore')

plt.rcParams['axes.prop_cycle'] = cycler(color='brgy')

Load and summarize data

In [2]:

df = pd.read_csv('mushrooms.csv')

pd.options.display.max_columns = None

df.info()

View data

In [3]:

df.head()


Plot target classes

In [4]:

total = len(df)
plt.figure(figsize=(13,5))
plt.subplot(121)
g = sns.countplot(x='class', data=df)
g.set_title("Mushroom class Count \np: Poisonous | e: Edible", fontsize=14)
g.set_ylabel('Count', fontsize=14)
for p in g.patches:
    height = p.get_height()
    g.text(p.get_x() + p.get_width() / 2.,
           height + 5,
           '{:1.2f}%'.format(height / total * 100),
           ha="center", fontsize=14, fontweight='bold')
plt.margins(y=0.1)
plt.show()

In [5]:

df['class'].value_counts()


Split features and target

In [6]:

X = df.drop(['class'], axis = 1)

Y = df['class']

One-hot encode categorical variables

In [7]:

X = pd.get_dummies(X, prefix_sep='_')

X.head()


In [8]:

# df still contains the 'class' target column, so subtract 1 to count only the features
print(f'Original feature count = {len(df.columns) - 1}, one-hot encoded column count = {len(X.columns)}')

Label encode target variable
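scikit-learn's `LabelEncoder` stores the sorted unique labels in `classes_` and encodes each label as its index in that list. Here that means 'e' (edible) becomes 0 and 'p' (poisonous) becomes 1. Any plot legend or class-name list should follow that order. The pure-Python sketch below mimics the mapping on a made-up list of labels.

```python
# LabelEncoder assigns integer codes to the *sorted* unique labels
labels = ['p', 'e', 'e', 'p', 'e']        # toy labels, not the real dataset
classes = sorted(set(labels))              # ['e', 'p']
mapping = {label: code for code, label in enumerate(classes)}
encoded = [mapping[label] for label in labels]
print(mapping)    # {'e': 0, 'p': 1}
print(encoded)    # [1, 0, 0, 1, 0]
```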

In [9]:

Y = LabelEncoder().fit_transform(Y)

Y


Scale features

In [10]:

X = StandardScaler().fit_transform(X)
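`StandardScaler` with default settings z-scores each column: it subtracts the column mean and divides by the population standard deviation. As I understand it, constant columns are left unscaled rather than divided by zero. The NumPy sketch below approximates that behaviour. `standardize` is a hypothetical helper, not the library's code.

```python
import numpy as np

def standardize(X):
    """Z-score each column: subtract its mean and divide by its population standard deviation."""
    mean = X.mean(axis=0)
    std = X.std(axis=0)            # ddof=0, i.e. population standard deviation
    std[std == 0] = 1.0            # constant columns become all zeros instead of NaN
    return (X - mean) / std

# One binary (one-hot style) column and one constant column
X = np.array([[0.0, 1.0], [1.0, 1.0], [1.0, 1.0], [0.0, 1.0]])
Z = standardize(X)
print(Z)
```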

Function to train, test and score a RandomForestClassifier

In [11]:

# Classifier

trainedforest = RandomForestClassifier(n_estimators=700)

In [12]:

def forest_test(X, Y):
    X_Train, X_Test, Y_Train, Y_Test = train_test_split(X, Y, test_size=0.30, random_state=0)
    # Classifier
    trainedforest = RandomForestClassifier(n_estimators=700).fit(X_Train, Y_Train)
    f, axes = plt.subplots(1, 3, figsize=(15, 5))
    preds = trainedforest.predict(X_Test)
    # LabelEncoder sorts labels alphabetically, so 'e' (Edible) -> 0 and 'p' (Poisonous) -> 1
    classes = ['Edible', 'Poisonous']

    cm = ConfusionMatrix(trainedforest, classes=classes, ax=axes[0])
    cm.fit(X_Train, Y_Train)
    cm.score(X_Test, Y_Test)
    axes[0].set_title('Confusion Matrix')
    axes[0].set_xlabel('Predicted Class')
    axes[0].set_ylabel('True Class')

    roc = ROCAUC(trainedforest, classes=classes, ax=axes[1])
    roc.fit(X_Train, Y_Train)
    roc.score(X_Test, Y_Test)
    axes[1].set_title('ROC AUC')
    axes[1].grid(False)
    axes[1].legend()

    prc = PrecisionRecallCurve(trainedforest, ax=axes[2])
    prc.fit(X_Train, Y_Train)
    prc.score(X_Test, Y_Test)
    axes[2].set_title('Precision Recall Curve')
    axes[2].grid(False)
    axes[2].legend()

    plt.tight_layout()
    plt.show()
    print('\n', classification_report(Y_Test, preds, target_names=classes))

Functions to test and plot 2D and 3D representations of the reduced features, coloured by target class

In [13]:

def complete_test_2D(X, Y, plot_name=''):
    Small_df = pd.DataFrame(data=X, columns=['C1', 'C2'])
    # Y is already label-encoded (0 = Edible, 1 = Poisonous) and aligned with the rows of X
    Small_df['class'] = Y
    forest_test(X, Y)
    plt.figure(figsize=(10, 8))
    classes = [1, 0]
    colors = ['r', 'b']
    for clas, color in zip(classes, colors):
        plt.scatter(Small_df.loc[Small_df['class'] == clas, 'C1'],
                    Small_df.loc[Small_df['class'] == clas, 'C2'],
                    c=color, alpha=0.5)
    plt.xlabel('Component 1', fontsize=12)
    plt.ylabel('Component 2', fontsize=12)
    plt.title(f'{plot_name}', fontsize=15)
    plt.legend(['Poisonous', 'Edible'])
    plt.grid(False)
    plt.show()

In [14]:

def complete_test_3D(X, Y, plot_name=''):
    Small_df = pd.DataFrame(data=X, columns=['C1', 'C2', 'C3'])
    # Use the encoded target passed in, so row subsets (e.g. X[:1500]) stay aligned with their labels
    Small_df['class'] = Y
    forest_test(X, Y)
    fig = plt.figure(figsize=(8, 6))
    ax = fig.add_subplot(1, 1, 1, projection="3d")
    ax.scatter(Small_df['C1'], Small_df['C2'], Small_df['C3'],
               c=Small_df['class'], alpha=.5, s=75, cmap='coolwarm')
    # coolwarm maps class 0 to blue and class 1 to red
    edible = mpatches.Patch(facecolor='b', label='0: Edible', linewidth=0.5, edgecolor='black')
    poisonous = mpatches.Patch(facecolor='r', label='1: Poisonous', linewidth=0.5, edgecolor='black')
    ax.set_title(f'{plot_name}', fontsize=15)
    ax.set(xlabel=f'\n{Small_df.columns[0]}', ylabel=f'\n{Small_df.columns[1]}', zlabel=f'\n{Small_df.columns[2]}')
    ax.legend(handles=[edible, poisonous], title="class", fontsize='medium', fancybox=True)
    plt.show()

Initial test

In [15]:

forest_test(X, Y)

Principal Component Analysis (PCA)

- When using PCA, we take our original data as input and look for linear combinations of the input features that best summarize the original data distribution in fewer dimensions. PCA does this by finding the orthogonal directions along which the projected data has maximum variance, which is equivalent to minimizing the squared reconstruction error. The original data is projected onto this set of orthogonal axes (the principal components), and the axes are ranked by how much of the variance each one explains.

- PCA is an unsupervised learning algorithm: it ignores the data labels and only looks at variance. If the direction that separates the classes happens to have low variance, PCA may discard it, which can lead to misclassification by a downstream model.
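The label-blindness described above can be reproduced on toy data. The NumPy sketch below computes PCA via an SVD of the centred data. The data is an assumed illustration, not the mushroom set. The first coordinate has a large spread but carries no class information, while the second has a small spread but separates the two classes. PC1 captures most of the variance yet barely separates the classes. The class gap lives on the low-variance PC2. `separation` is a hypothetical helper.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 500
# Toy data (assumed): x has a large spread but no label information,
# while y has a small spread but separates the two classes
x = rng.normal(scale=10.0, size=2 * n)
y = np.concatenate([rng.normal(-1.0, 0.3, n), rng.normal(1.0, 0.3, n)])
labels = np.repeat([0, 1], n)
X = np.column_stack([x, y])

# PCA via SVD of the centred data: rows of vt are the principal axes, sorted by variance
Xc = X - X.mean(axis=0)
_, s, vt = np.linalg.svd(Xc, full_matrices=False)
explained_ratio = s**2 / np.sum(s**2)

def separation(z, labels):
    """Distance between the two class means along z, in units of z's overall standard deviation."""
    return abs(z[labels == 0].mean() - z[labels == 1].mean()) / z.std()

z1, z2 = Xc @ vt[0], Xc @ vt[1]
print(f"PC1 explains {explained_ratio[0]:.1%} of the variance")
print(f"class separation along PC1: {separation(z1, labels):.2f}")
print(f"class separation along PC2: {separation(z2, labels):.2f}")
```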

Testing first 2 principal components

In [16]:

from sklearn.decomposition import PCA

pca = PCA(n_components=2)

X_pca = pca.fit_transform(X)

PCA_df = pd.DataFrame(data = X_pca, columns = ['PC1', 'PC2'])

PCA_df = pd.concat([PCA_df, df['class']], axis = 1)

PCA_df['class'] = LabelEncoder().fit_transform(PCA_df['class'])

PCA_df.head()


In [17]:

complete_test_2D(X_pca, Y, 'PCA')

First 2 principal components Explained Variance

In [18]:

var_ratio = pca.explained_variance_ratio_
cum_var_ratio = np.cumsum(var_ratio)
# create column names
col_num = X_pca.shape[1]
feat_names = ['PC' + str(num) for num in range(1, col_num + 1)]
sns.barplot(y=var_ratio, x=feat_names)
sns.pointplot(y=cum_var_ratio, x=feat_names, color='black', label='cumulative')
plt.grid(False)
plt.title("Explained variance ratio by principal component", fontsize=14)
# explained_variance_ratio_ holds fractions between 0 and 1, not percentages
plt.ylabel("Explained variance ratio")
plt.legend(['cumulative'])
plt.show()
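The cumulative ratios can also guide how many components to keep. A common rule is to take the smallest number of leading components whose cumulative ratio reaches a chosen threshold. The NumPy sketch below implements that rule. `n_components_for` is a hypothetical helper, and the ratios are made-up illustrative values, not results from the mushroom data. scikit-learn's `PCA` can make a similar choice itself when given a float such as `n_components=0.95` with `svd_solver='full'`.

```python
import numpy as np

def n_components_for(var_ratio, threshold=0.95):
    """Smallest number of leading components whose cumulative explained-variance ratio reaches threshold."""
    cum = np.cumsum(var_ratio)
    # searchsorted gives the first index where cum >= threshold; +1 turns the index into a count
    k = int(np.searchsorted(cum, threshold) + 1)
    return min(k, len(var_ratio))   # cap at the number of available components

# Illustrative ratios only (not computed from the mushroom data)
ratios = np.array([0.50, 0.30, 0.15, 0.05])
print(n_components_for(ratios, 0.75))   # 2
print(n_components_for(ratios, 0.90))   # 3
```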

Testing first 3 principal components

In [19]:

pca = PCA(n_components=3)

X_pca = pca.fit_transform(X)

complete_test_3D(X_pca, Y, 'PCA')

In [20]:

var_ratio = pca.explained_variance_ratio_
cum_var_ratio = np.cumsum(var_ratio)
# create column names
col_num = X_pca.shape[1]
feat_names = ['PC' + str(num) for num in range(1, col_num + 1)]
sns.barplot(y=var_ratio, x=feat_names)
sns.pointplot(y=cum_var_ratio, x=feat_names, color='black', label='cumulative')
plt.grid(False)
plt.title("Explained variance ratio by principal component", fontsize=14)
# explained_variance_ratio_ holds fractions between 0 and 1, not percentages
plt.ylabel("Explained variance ratio")
plt.legend(['cumulative'])
plt.show()

t-Distributed Stochastic Neighbor Embedding (t-SNE)

- t-SNE is a non-linear dimensionality reduction technique that is typically used to visualize high-dimensional datasets. Some of its main applications are in Natural Language Processing (NLP) and speech processing.

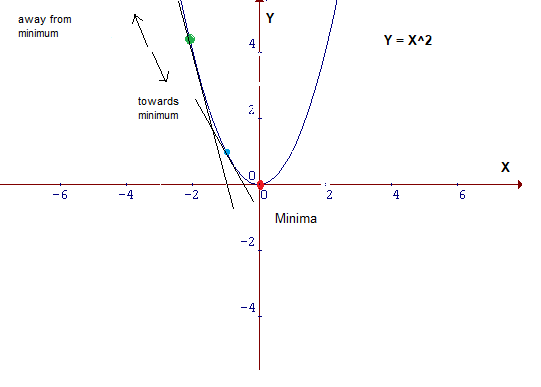

- t-SNE works by minimizing the divergence between two distributions. The first is built from the pairwise similarities of the input points in the original high-dimensional space, and the second is its equivalent in the reduced low-dimensional space. t-SNE uses the Kullback-Leibler (KL) divergence to measure the dissimilarity between these two distributions, and then minimizes the KL divergence using gradient descent.
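For discrete distributions P and Q, the KL divergence is KL(P || Q) = Σ p_i log(p_i / q_i). It is zero only when the two distributions match, and it is not symmetric. The NumPy sketch below uses small made-up distributions. In t-SNE, P and Q would instead be the pairwise-similarity distributions in the original and embedded spaces. scikit-learn's `TSNE` reports the final value in its `kl_divergence_` attribute after fitting. `kl_divergence` here is a hypothetical helper.

```python
import numpy as np

def kl_divergence(p, q, eps=1e-12):
    """KL(P || Q) = sum_i p_i * log(p_i / q_i) for discrete distributions (natural log)."""
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=float)
    p, q = p / p.sum(), q / q.sum()          # make sure both sum to 1
    mask = p > 0                             # terms with p_i = 0 contribute nothing
    return float(np.sum(p[mask] * np.log(p[mask] / np.maximum(q[mask], eps))))

p = [0.5, 0.5]
print(kl_divergence(p, p))              # 0.0 for identical distributions
print(kl_divergence(p, [0.9, 0.1]))     # about 0.511
print(kl_divergence([0.9, 0.1], p))     # about 0.368: KL is not symmetric
```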

Gradient descent is an optimization algorithm used to minimize some function by iteratively moving in the direction of steepest descent as defined by the negative of the gradient.

Here, X is the parameter to optimize and y is the loss (or cost) function.
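A minimal sketch of gradient descent on one parameter follows. It assumes the toy cost y = (X - 3)^2, whose derivative is 2(X - 3) and whose minimum is at X = 3. Each step moves X against the gradient by an amount scaled by the learning rate. A learning rate that is too large overshoots the minimum and diverges. `gradient_descent` is a hypothetical helper written for this example.

```python
def gradient_descent(grad, x0, learning_rate=0.1, n_steps=100):
    """Repeatedly step against the gradient: x <- x - learning_rate * grad(x)."""
    x = x0
    for _ in range(n_steps):
        x = x - learning_rate * grad(x)
    return x

# Toy cost y = (x - 3)**2 with derivative dy/dx = 2 * (x - 3); the minimum is at x = 3
cost = lambda x: (x - 3) ** 2
grad = lambda x: 2 * (x - 3)

x_min = gradient_descent(grad, x0=0.0, learning_rate=0.1, n_steps=100)
print(round(x_min, 4), round(cost(x_min), 8))   # 3.0 0.0

# Too large a learning rate overshoots further on every step and diverges
x_bad = gradient_descent(grad, x0=0.0, learning_rate=1.1, n_steps=50)
```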

- A Cost Function/Loss Function evaluates the performance of our Machine Learning algorithm. The loss function computes the error for a single training example, while the cost function is the average of the losses over all the training examples.

- The goal is to minimise the function: we need to find the value of X that produces the lowest value of y.

- The derivative (computed, for example, with the power rule or the chain rule) gives the slope of the cost function at a particular point. This is the slope of the tangent line to the curve at that point, and its sign tells us which direction leads towards the minimum. When the cost depends on two or more parameters, we compute a partial derivative with respect to each one.

- The vector of partial derivatives of a function with respect to all of its parameters is called the gradient.

- The size of the steps taken towards the minimum is controlled by the learning rate.

- In t-SNE, similarities in the high-dimensional space are modelled with a Gaussian distribution, while similarities in the low-dimensional space are modelled with a Student's t-distribution (with one degree of freedom). The heavier tails of the t-distribution let moderately distant points be placed farther apart in the embedding. This counteracts the imbalance in neighbour distances (the "crowding problem") caused by the translation into a lower-dimensional space.
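The effect of the heavier tail can be seen by evaluating both similarity kernels at a few distances. The sketch below assumes a Gaussian with sigma = 1. Real t-SNE tunes a per-point sigma from the perplexity. The Student-t kernel is 1 / (1 + d^2), the one-degree-of-freedom form used for the low-dimensional map. Beyond small distances, the t kernel decays far more slowly.

```python
import numpy as np

d = np.array([0.0, 1.0, 2.0, 3.0, 5.0])   # example pairwise distances

gaussian = np.exp(-d**2 / 2)              # Gaussian kernel (sigma = 1 assumed here)
student_t = 1.0 / (1.0 + d**2)            # Student's t kernel with one degree of freedom

for di, g, t in zip(d, gaussian, student_t):
    print(f"distance {di:.0f}: gaussian {g:.2e}  student-t {t:.2e}")
```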

Testing a 2-component t-SNE embedding

In [21]:

from sklearn.manifold import TSNE

# n_iter was renamed max_iter in scikit-learn 1.5; use max_iter=300 on recent versions
tsne = TSNE(n_components=2, verbose=1, perplexity=40, n_iter=300)

X_tsne = tsne.fit_transform(X)

In [22]:

complete_test_2D(X_tsne, Y, 't-SNE')

Testing a 3-component t-SNE embedding

In [23]:

# n_iter was renamed max_iter in scikit-learn 1.5; use max_iter=300 on recent versions
tsne = TSNE(n_components=3, verbose=1, perplexity=40, n_iter=300)

X_tsne = tsne.fit_transform(X)

complete_test_3D(X_tsne, Y, 't-SNE')

Independent Component Analysis (ICA)

- ICA is a linear dimensionality reduction method that takes as input a mixture of independent components and aims to correctly identify each of them (discarding unnecessary noise). Two input features can be considered independent if both their linear and non-linear dependence are zero.

- As a simple example of an ICA application, suppose we are given an audio recording in which two different people are talking. Using ICA, we could try to identify the two independent components in the recording (the two speakers). In this way, our unsupervised learning algorithm could distinguish between the different speakers in the conversation.

- Using ICA, we can now again reduce our dataset to just three features, test its accuracy using a Random Forest Classifier and plot the results.
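Why "both linear and non-linear" dependence matter: correlation only measures linear dependence, so two variables can be uncorrelated and still fully dependent. The NumPy sketch below uses toy data where y = x^2 with x symmetric around zero. The correlation between x and y is close to zero, but a non-linear transform of x reveals the dependence. This is why ICA looks beyond decorrelation. FastICA, for instance, works by maximizing non-Gaussianity.

```python
import numpy as np

rng = np.random.default_rng(0)
x = rng.uniform(-1.0, 1.0, size=100_000)
y = x**2                                    # y is completely determined by x

linear_corr = np.corrcoef(x, y)[0, 1]       # close to 0: no *linear* dependence
nonlinear_corr = np.corrcoef(x**2, y)[0, 1] # 1: a non-linear transform of x reveals it
print(f"corr(x, y)   = {linear_corr:.3f}")
print(f"corr(x^2, y) = {nonlinear_corr:.3f}")
```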

In [24]:

from sklearn.decomposition import FastICA

ica = FastICA(n_components=3)

X_ica = ica.fit_transform(X)

complete_test_3D(X_ica, Y, 'ICA')

Linear Discriminant Analysis (LDA)

- LDA is both a supervised dimensionality reduction technique and a Machine Learning classifier.

- LDA aims to maximize the distance between the class means while minimizing the spread within each class.

- LDA assumes that the input data in each class follows a Gaussian distribution and that all classes share the same covariance, i.e. the values of each variable vary around their class mean by the same amount on average.

- In this example, we run LDA to reduce our dataset to a single feature, test its accuracy and plot the results. With two classes, LDA can produce at most n_classes - 1 = 1 component. Because LDA uses the labels and is fitted on all rows before forest_test splits off the test set, the reported accuracy is likely to be optimistic.
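For two classes, the single LDA component should point, up to scale and sign, along Fisher's discriminant direction w ∝ S_w⁻¹(m₁ − m₀). Here S_w is the within-class scatter matrix and m₀, m₁ are the class means. The NumPy sketch below computes that direction on toy data. It is not scikit-learn's implementation. It learns the direction from training rows only and then projects held-out rows. `fisher_direction` is a hypothetical helper.

```python
import numpy as np

def fisher_direction(X, y):
    """Two-class Fisher discriminant direction: w proportional to S_w^{-1} (m1 - m0)."""
    X0, X1 = X[y == 0], X[y == 1]
    m0, m1 = X0.mean(axis=0), X1.mean(axis=0)
    # Within-class scatter: the sum of each class's scatter around its own mean
    Sw = (X0 - m0).T @ (X0 - m0) + (X1 - m1).T @ (X1 - m1)
    w = np.linalg.solve(Sw, m1 - m0)
    return w / np.linalg.norm(w)

rng = np.random.default_rng(0)
n = 500
# Toy data (assumed): large spread along the first coordinate, class gap along the second
X = np.column_stack([
    rng.normal(scale=10.0, size=2 * n),
    np.concatenate([rng.normal(-1.0, 0.3, n), rng.normal(1.0, 0.3, n)]),
])
y = np.repeat([0, 1], n)

# Learn the direction from training rows only, then project the held-out rows
idx = rng.permutation(2 * n)
train, test = idx[:700], idx[700:]
w = fisher_direction(X[train], y[train])
z_test = X[test] @ w
print(np.round(np.abs(w), 3))                             # dominated by the second coordinate
print(z_test[y[test] == 0].mean(), z_test[y[test] == 1].mean())
```

Unlike PCA, this direction ignores the high-variance but uninformative first coordinate, because it is chosen using the labels.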

In [25]:

from sklearn.discriminant_analysis import LinearDiscriminantAnalysis

lda = LinearDiscriminantAnalysis(n_components=1)

# run an LDA and use it to transform the features

X_lda = lda.fit(X, Y).transform(X)

forest_test(X_lda, Y)

Locally linear embedding (LLE)

- Locally linear embedding (LLE) seeks a lower-dimensional projection of the data which preserves distances within local neighborhoods. It can be thought of as a series of local Principal Component Analyses which are globally compared to find the best non-linear embedding.
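The standard LLE algorithm has two steps. First, it expresses each point as a weighted combination of its k nearest neighbours, with weights summing to 1. Second, it finds low-dimensional coordinates that are reconstructed by those same weights. The NumPy sketch below shows only the first step, for a single point. It adds a small regularizer proportional to the trace of the local Gram matrix. I believe this is similar to scikit-learn's default `reg=1e-3`, but treat that as an assumption. `lle_weights` is a hypothetical helper.

```python
import numpy as np

def lle_weights(X, i, k=2, reg=1e-3):
    """Weights that best reconstruct X[i] from its k nearest neighbours, constrained to sum to 1."""
    dists = np.linalg.norm(X - X[i], axis=1)
    neighbours = np.argsort(dists)[1 : k + 1]        # skip the point itself (distance 0)
    Z = X[neighbours] - X[i]                         # neighbours shifted so X[i] is the origin
    G = Z @ Z.T                                      # local Gram matrix (k x k)
    G = G + reg * np.trace(G) * np.eye(k)            # regularise in case G is singular
    w = np.linalg.solve(G, np.ones(k))
    return neighbours, w / w.sum()                   # enforce the sum-to-one constraint

# Points on a straight line: the second point is the exact average of its two neighbours
X = np.array([[0.0, 0.0], [1.0, 1.0], [2.0, 2.0], [5.0, 5.0]])
nbrs, w = lle_weights(X, i=1, k=2)
print(nbrs, np.round(w, 3))
print(X[nbrs].T @ w)   # reconstruction of X[1]
```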

In [26]:

from sklearn.manifold import LocallyLinearEmbedding

embedding = LocallyLinearEmbedding(n_components=3)

X_lle = embedding.fit_transform(X[:1500])

complete_test_3D(X_lle, Y[:1500], 'LLE')

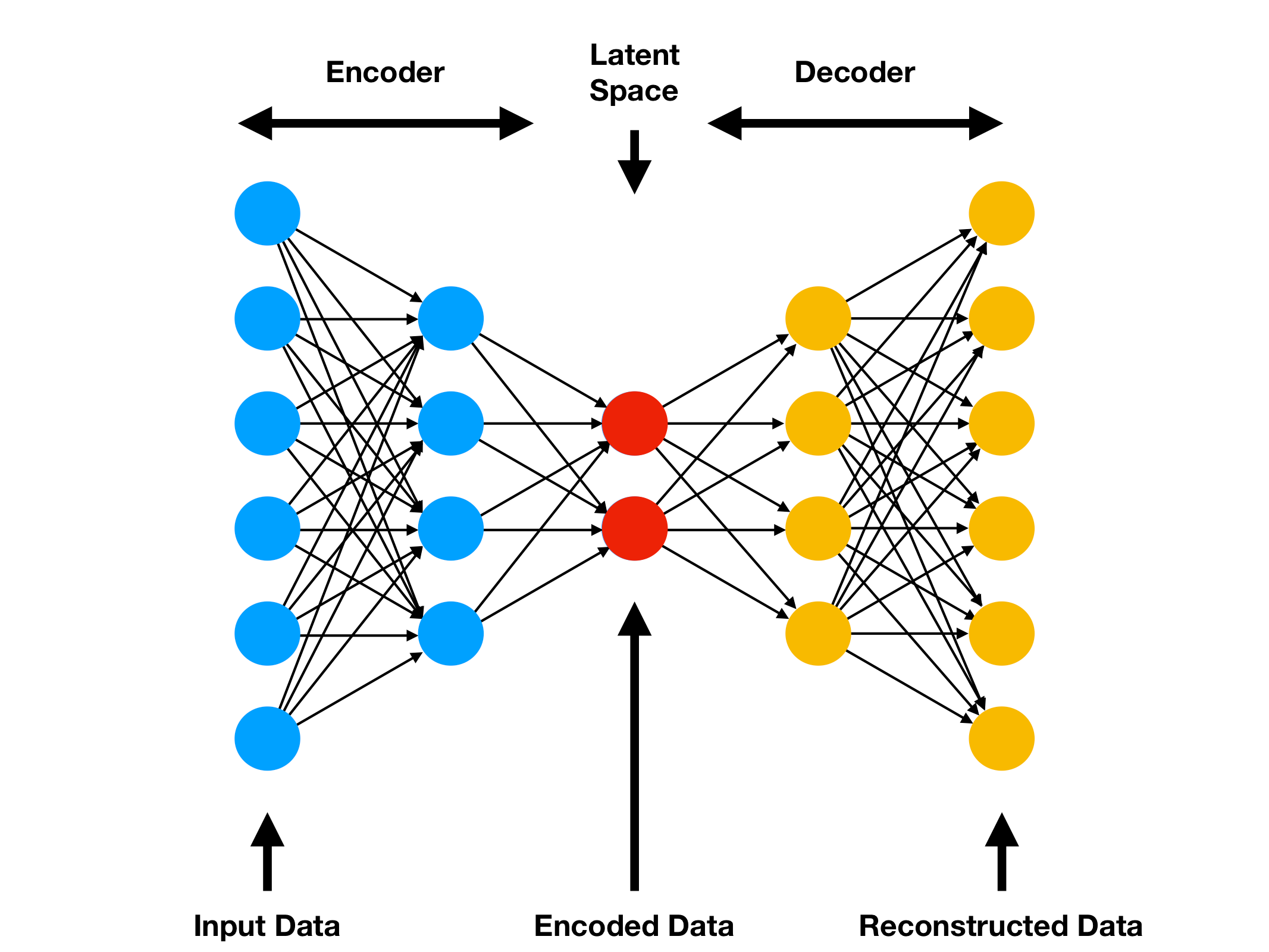

Autoencoders

- Autoencoders are a family of Machine Learning algorithms that can be used as a dimensionality reduction technique. Unlike linear techniques such as PCA, ICA and LDA, Autoencoders can use non-linear transformations to project data from a high dimension to a lower one.

- There exist different types of Autoencoders such as:

- Denoising Autoencoder

- Variational Autoencoder

- Convolutional Autoencoder

- Sparse Autoencoder

In this example, we will start by building a basic Autoencoder (Figure Below).

- The basic architecture of an Autoencoder can be broken down into 2 main components:

- Encoder: takes the input data and compresses it, removing as much noise and unhelpful information as possible. The output of the Encoder stage is usually called the bottleneck or latent space.

- Decoder: takes the encoded latent space as input and tries to reproduce the original Autoencoder input using only its compressed form (the encoded latent space).

- If all the input features are independent of each other, the Autoencoder will find it particularly difficult to encode and decode the input data into a lower-dimensional space, because there is no redundancy between features to exploit.

- Autoencoders can be implemented in Python using the Keras API. In this case, we specify in the encoding layer the number of features we want our input data reduced to (3 in this example).

- As the code snippet below shows, the Autoencoder is trained with X (our input features) as both the inputs and the targets.

- For this example, I decided to use ReLU as the activation function for the encoding stage and Softmax for the decoding stage. Without non-linear activation functions, the Autoencoder could only reduce the input data with a linear transformation, giving a result similar to PCA.
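The encoder/decoder structure and the linear-collapse point can be sketched in NumPy, outside Keras. The sketch below uses untrained random weights and illustrative sizes (20 input features, 3 latent features). Its decoder is linear rather than softmax, an assumption made for simplicity. It runs one batch through a ReLU encoder and the decoder. It then shows that without the activation, encoder and decoder multiply into a single matrix of rank at most 3. As far as I know, under squared-error training, the best such linear map spans the same subspace as the leading principal components.

```python
import numpy as np

rng = np.random.default_rng(0)
n_features, n_latent = 20, 3   # illustrative sizes, not the mushroom data's width

# Untrained, randomly initialised weights for a one-layer encoder and a one-layer decoder
W_enc = rng.normal(scale=0.1, size=(n_features, n_latent))
b_enc = np.zeros(n_latent)
W_dec = rng.normal(scale=0.1, size=(n_latent, n_features))
b_dec = np.zeros(n_features)

def relu(z):
    return np.maximum(z, 0.0)

def encode(x):
    # Encoder: project to the 3-unit bottleneck and apply ReLU
    return relu(x @ W_enc + b_enc)

def decode(h):
    # Decoder: linear output layer (assumed here for simplicity)
    return h @ W_dec + b_dec

x = rng.normal(size=(5, n_features))   # a batch of 5 samples
h = encode(x)                          # latent codes, shape (5, 3)
x_hat = decode(h)                      # reconstructions, shape (5, 20)
reconstruction_error = np.mean((x - x_hat) ** 2)   # the quantity training would minimise

# Without the ReLU, encoder + decoder collapse into one linear map of rank <= n_latent
W_total = W_enc @ W_dec
print(h.shape, x_hat.shape, np.linalg.matrix_rank(W_total))   # (5, 3) (5, 20) 3
assert np.allclose((x @ W_enc) @ W_dec, x @ W_total)
```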

In [27]:

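from keras.layers import Input, Dense
from keras.models import Model

# Encoder: compress the X.shape[1] input features into a 3-unit bottleneck
input_layer = Input(shape=(X.shape[1],))
encoded = Dense(3, activation='relu')(input_layer)
# Decoder: map the 3 latent features back to the original width
decoded = Dense(X.shape[1], activation='softmax')(encoded)
autoencoder = Model(input_layer, decoded)
# Note: X was standardized, so its values are not limited to [0, 1], while a softmax output
# and binary cross-entropy assume they are; a linear output with loss='mse' is a common
# alternative for scaled inputs
autoencoder.compile(optimizer='adam', loss='binary_crossentropy')
# The inputs are also the targets: the model learns to reconstruct X
X1, X2, Y1, Y2 = train_test_split(X, X, test_size=0.3, random_state=101)
autoencoder.fit(X1, Y1,
                epochs=100,
                batch_size=300,
                shuffle=True,
                verbose=0,
                validation_data=(X2, Y2))
# Keep only the encoder half to produce the 3-dimensional representation
encoder = Model(input_layer, encoded)
X_ae = encoder.predict(X)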

In [28]:

complete_test_3D(X_ae, Y, 'Autoencoder')
